Why it took me 6 years to publish a paper!? A blog companion to Conditional Process Analysis for Two-Instance Repeated-Measures Designs

- Amanda Montoya

- Oct 31, 2024


Every paper has a story behind it, and I believe these stories are important to tell. Telling the story of a paper helps me to reflect on the process of generating the research. These stories may also help others to learn more about how research is conducted, and see behind the curtain of academic papers. A lot happens that doesn't end up in the final manuscript, so I hope to use these blogs to tell each paper's untold story. Today I’m writing about a paper that was recently accepted at Psychological Methods titled “Conditional Process Analysis for Two-Instance Repeated-Measures Designs.” I assume the paper will be published in late 2024 or early 2025. I will update this blog with a link once the paper is out. For now, here's the preprint: https://osf.io/preprints/psyarxiv/wrtb6.

This paper is primarily a product of my dissertation, which I defended at The Ohio State University in June of 2018. One might ask why it took six years to get from a defended dissertation to a published paper, and that is what I’ll focus on in this blog: why do things often take longer than we expect? While I cannot say why this happens in all cases, I can provide some context for why it happened in this case. I see this delay as the product of three combined factors: 1) I started a faculty job very shortly after my dissertation defense and got very overwhelmed with my other duties in this position, 2) we [my advisor and I] had other papers we thought should be published first, so they were prioritized over this paper (for a time), and 3) this paper contains a big development in a computational tool which is meant to be released with the paper, my macro MEMORE, which required a lot of extra time to implement.

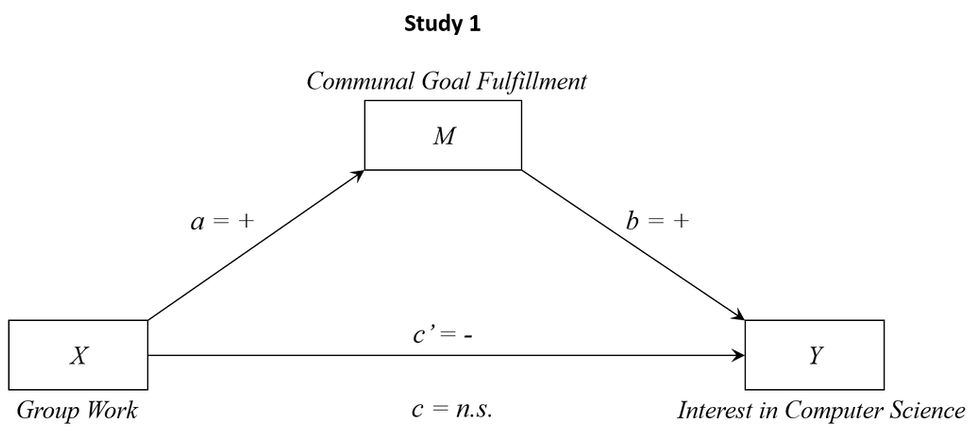

I had two different ideas for what I might do for my dissertation, but when I received a job offer I knew I needed to pursue the idea that would take less time. While in graduate school, I developed new methods for testing mediation (Montoya & Hayes, 2017) and moderation (Montoya, 2018) for two-instance repeated-measures designs. "What are those?" you might ask. I’ve long been frustrated by the lack of clarity in language for repeated-measures designs, but I think the simplest way to phrase this is that if you would test a “total effect” (the effect of X on Y) using a paired t-test, then you would use this method to test mediation of the effect (X->M->Y). I got into this work because when I was in undergrad, I ran a between-subjects experiment and used one of Andrew Hayes’s macros (MEDIATE) to test mediation. We then replicated the study using a within-subject design, but could not figure out how to test the mediation. It felt strange that two very similar designs, both taught in introductory research methods courses, would differ so much in the methods available for testing mediation. A mentor, Tony Greenwald, pointed me to a paper by Judd, Kenny, and McClelland (2001), which I read but found incomplete. There was no quantification of the indirect effect like we get in between-subject designs and no bootstrap confidence interval method. I reached out to Dr. Hayes, and he said that he had no solution but that it was an interesting problem. When I asked around among other social psychologists, they expressed a similar frustration to mine about the lack of methods available for within-subject designs. When I looked at the literature I saw people applying very unusual methods, and this concerned me greatly. This problem felt very solvable to me, and solving it would impact the research of many people. That's the kind of problem I'm interested in solving. I then applied to work with Dr. Hayes in graduate school, proposing to focus on this specific topic. It's a small thing, but I would like to note: many people told me that my interests would change in graduate school, and for many of my peers they did, but I stuck tightly to my plan, and I think it’s worthwhile to acknowledge that your interests may not change, and that’s okay too. Ultimately my dissertation focused on extending these developments to moderated mediation in two-instance repeated-measures designs.
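For readers who want to see the paired t-test analogy concretely, here is a minimal sketch of a two-instance within-subject mediation analysis in Python. It is not MEMORE and is not taken from the paper: the function names (`within_subject_mediation`, `bootstrap_ci`) and the simulated data are hypothetical, and the regression form (difference scores plus a mean-centered average of the mediator) is an assumption based on the path-analytic approach described in Montoya & Hayes (2017). It estimates the indirect effect as a product of two paths and attaches a percentile bootstrap confidence interval.

```python
import numpy as np


def within_subject_mediation(m1, m2, y1, y2):
    """Path estimates for a two-instance repeated-measures mediation (sketch)."""
    m1, m2, y1, y2 = (np.asarray(v, dtype=float) for v in (m1, m2, y1, y2))
    m_diff = m2 - m1                      # within-person change in the mediator
    y_diff = y2 - y1                      # within-person change in the outcome
    m_avg = (m1 + m2) / 2.0
    m_avg_c = m_avg - m_avg.mean()        # mean-centered mediator average

    a = m_diff.mean()                     # effect of instance on M
    c = y_diff.mean()                     # total effect (what a paired t-test tests)

    # Regress the outcome difference on the mediator difference and the
    # centered mediator average; the intercept is the direct effect c'.
    design = np.column_stack([np.ones_like(m_diff), m_diff, m_avg_c])
    coefs, *_ = np.linalg.lstsq(design, y_diff, rcond=None)
    c_prime, b = coefs[0], coefs[1]

    return {"a": a, "b": b, "c": c, "c_prime": c_prime, "indirect": a * b}


def bootstrap_ci(m1, m2, y1, y2, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for the indirect effect a*b."""
    rng = np.random.default_rng(seed)
    data = np.column_stack([m1, m2, y1, y2]).astype(float)
    n = data.shape[0]
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        sample = data[rng.integers(0, n, size=n)]   # resample participants
        estimates[i] = within_subject_mediation(
            sample[:, 0], sample[:, 1], sample[:, 2], sample[:, 3]
        )["indirect"]
    alpha = 1.0 - level
    lower, upper = np.percentile(estimates, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return lower, upper


# Simulated (not real) data: 60 participants measured in two instances.
rng = np.random.default_rng(1)
n = 60
m1 = rng.normal(size=n)
m2 = m1 + 0.5 + rng.normal(scale=0.5, size=n)
y1 = 0.4 * m1 + rng.normal(size=n)
y2 = 0.4 * m2 + 0.2 + rng.normal(size=n)

result = within_subject_mediation(m1, m2, y1, y2)
ci = bootstrap_ci(m1, m2, y1, y2)
print(result)
print("95% percentile bootstrap CI for the indirect effect:", ci)
```

In this sketch the total effect decomposes as `c = c_prime + a * b`, which is the quantification of the indirect effect that the difference-score tests alone do not provide. Real analyses should use MEMORE or another vetted implementation rather than this illustration.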

It may be useful to discuss how each part of the dissertation played into this paper. A dissertation typically has many chapters, and most dissertations contain research that could amount to multiple papers. For some people, those papers are already written and published, for some they are papers that will be pursued after the defense, and for some it is a mix. For me it was a mix. The first chapter outlined approaches for mediation, moderation, and moderated mediation (or conditional process analysis) in between-subject designs. This read very much like a review paper and while it did not contribute anything new, it allowed me to express the ideas in my own words, and establish a nomenclature and notation that I would use moving forward. The second chapter covered mediation and moderation in two-instance repeated-measures designs. It was in many ways a re-hashing of two papers already published (Montoya & Hayes, 2017 and Montoya, 2018). The third chapter gave an overview of the conditional process analysis models for the two-instance repeated-measures design, and largely contributed to the core content of this upcoming paper in Psychological Methods. Looking back at the outline, I kept much of the structure the same, but also cut quite a bit of material that I found interesting at the time, but was maybe not as practically important for applied researchers (e.g., Models where Instance is not a Moderator). The fourth chapter presented three applied examples: one made it into the final paper, one was moved to an appendix for the final paper, and one was dropped entirely. Chapter 5 discussed alternative analytical approaches; it ran 26 pages in the dissertation and was ultimately cut down to a few short paragraphs in the discussion section of the paper. Chapter 6 was a general discussion, some of which was also ported over to the general discussion in the paper. Ultimately, the dissertation was 163 pages, but the paper ended up being about 60 pages (15,000 words), so most of the work I did was cutting material out and rewriting to organize ideas more succinctly.

One reason that things moved very slowly on this paper was that there were a few papers that Dr. Hayes and I wanted to prioritize publishing first. Prior to starting work on my dissertation, I had started a paper with Dr. Hayes focused on a special case of these moderated mediation models. Progress on that paper was very slow and the paper was rejected at multiple journals. At that point, my focus shifted to another single-author paper that was focused on mediation. It was essentially the second paper to come out of my first-year project, so I felt it was more important to get out first than the moderated mediation. That paper went under review in 2020 and was ultimately accepted in 2022 (Montoya, 2023). It was around then that I picked up work again on the paper that is the focus of this blog. Often when I am reflecting on how long a task will take, I am only thinking of the actual time dedicated to the task. From 2018 to 2022, very little time was dedicated to this paper. I had other things that were a higher priority, and this process was a useful learning opportunity: the time it takes before a task is done is a combination of the time spent prioritizing other things over that task and the actual time needed to finish it, not just the latter.

One reason for the delay of publication that I mentioned was the tool that was meant to be attached to the paper. With the previous papers, I had developed a macro MEMORE, available for SPSS and SAS, which fit the models described in the papers. Because my dissertation process was very accelerated (I proposed in January 2018 and defended in May 2018), I did not do any of the software implementation as part of my dissertation, just the theoretical work and a few applied examples with real datasets. So, one of the biggest barriers for the submission of this paper was a working version of MEMORE with all the new moderated mediation models. I found it very hard to find time to work on programming, as I would often need long periods of work to get focused and remember all the finer details of what I was working on. Most people who have started a faculty position with many different responsibilities (teaching, mentorship, service, lab management) know that our time is distributed very differently from that of a typical graduate student. I found it difficult to find the time and make real progress on MEMORE. I tried many different strategies to get the work done, like scheduling big blocks of time once a week and setting aside whole weeks during the summer. I didn’t start to make real progress until I hired a research assistant who was assigned to test MEMORE (shout out to Nickie Yang). This gave me a new level of accountability, knowing I needed to get a new version done for her to test before our meeting. She would spot problems and was great at identifying when certain issues occurred, which I would then fix for the following week. The first beta release of MEMORE Version 3 came out in January 2022. But this version only included SPSS. I spent some time fixing issues that arose in the SPSS version while working with beta testers, mostly people who emailed me asking if moderated mediation was possible. The SAS version was very slow to come because Dr. Hayes wrote the first SAS version of MEMORE for our 2017 paper, and I was not as comfortable with SAS as I was with SPSS. Over time I was also noticing that very few people who emailed me for help with MEMORE were SAS users, leading me to think that I either didn't have a lot of SAS users or maybe they were more savvy and didn't need help. As such, I found it hard to stay motivated to work on the SAS version. The first version of MEMORE V3 for SAS was given to a small workshop I led in September 2024, so very recently. Summer of 2024 was largely focused on getting this done, knowing that the manuscript was close to acceptance.

In December of 2022, I copied the chapters from my dissertation that corresponded to this paper (primarily Chapters 3 to 6) and started working in earnest on getting it ready for publication. I typically have a specific project that is at the “top of my list.” This is the paper that gets my dedicated writing time, and I read papers related to that paper during this time as well. I think I can say that this was around when this paper became my top priority. In May of 2023, I submitted the paper for the first time to Psychological Methods. The first decision was a major revision, which came to me in November of 2023 (so about 6 months under review). The reviews were relatively simple, but perhaps the most difficult issue to tackle was the discussion of causal inference. Because this design is not discussed very often, there is limited research on causal inference in these designs for mediation alone and absolutely nothing for moderated mediation. I went back and forth on whether to add formal derivations, which I didn’t feel prepared to do on my own and which would add a lot of length to an already lengthy paper. Ultimately, I decided to add sections on the issue throughout the manuscript, add descriptions for each applied example, and acknowledge that much more needs to be done in this area in the future. I hope to continue this work soon.

I resubmitted to Psychological Methods in April 2024. Looking back now I’m wondering why it took me 6 months to do a relatively straightforward revision. One issue that I have is that I often prioritize work with colleagues over work that is only mine. So, during this period I was primarily focused on other projects, including those led by my graduate students, and it took a while before this ended up on the top of the pile again. I frequently find myself thinking, when I am doing solo-authored work, that I don’t want to do this again. This paper will be my third solo-authored paper, and I think at this point there is no reason for me to be concerned that outside reviewers may question my independent contributions to the field. I ultimately don’t think this is what motivates me to work on solo-authored papers now, even though it was when I started my first one. But sometimes it is nice to work on something completely your own. It helps me build up my sensibilities of when a paper is ready, what it needs, and self-editing. When you don’t have someone else to bounce ideas off of, it’s hard, but it also improves your ability to produce research and think independently. So, while right now I think I’ll take a break from solo work, I think it’s likely that I’ll start something like this again in a few years.

The paper received a minor revisions decision in June 2024: the primary issue was length, so I cut down the paper quite a lot and resubmitted in August 2024. It was accepted in September 2024. I’m very excited that this work is going to be reaching audiences soon (though technically the preprint is already out), and even though it’s taken many years for the work to get out, I also feel like I’ve learned a lot along the way. I’ve found better ways to prioritize tool development, improved my understanding of the causal inference literature, and improved my ability to push forward a project independently. Below I summarize the submission timeline for the project. I feel very lucky that this paper got picked up by the first journal I submitted to; this is not always the case, and going through multiple journals can add a lot of time to the timeline. The preprint with the different revisions is available here: https://osf.io/preprints/psyarxiv/wrtb6.

Publication Timeline

| Action | Journal | Date |
| --- | --- | --- |
| Project Completed | Dissertation | May 2018 |
| Submitted | Psychological Methods | 5/12/2023 |
| Major Revisions Decision | Psychological Methods | 11/14/2023 |
| Submitted | Psychological Methods | 4/8/2024 |
| Minor Revisions Decision | Psychological Methods | 6/18/2024 |
| Submitted | Psychological Methods | 8/2/2024 |
| Accepted | Psychological Methods | 9/18/2024 |

Journals Submitted to: 1

Months Under Review: 9
